The myth of “test everything” AB testing

The "test everything" approach to website optimisation is one of those popular myths that won't die. Here's how to prioritise your AB testing better and lift your website conversion rate this year.

Author: Chaoming Li, Founder & CEO, ex-IAG

In this article:

- Why conversion rate success is increasingly determined by prioritised AB testing, not a "test everything" approach

- Explore the benefits of prioritising your AB testing with easy-to-understand experience data

- Walking in your users' shoes is key, but websites with 1M+ unique page views per month and 100,000+ session replays struggle to find the relevant recordings quickly. We'll explore how to solve this problem.

Digital experience drives website revenue; however, there is no one-size-fits-all strategy.

This is because each website uniquely attracts users with differing demographic, psychographic and behavioural characteristics.

Users also differ in where they are in their buying journey: some are ready to buy, while others are just researching their options.

With so much to get right when optimising conversion rates, it’s tempting to simply “test everything”.

Unfortunately, this approach is a popular myth. When you test everything, you’re likely testing nothing of significance. With limited time and resources, I find most companies don’t have enough people to test every opportunity properly.

— Peep Laja (@peeplaja) April 7, 2014

It's also worth noting that only 1 in 7 website AB tests is successful (VWO), which increases the risk of a “test everything” approach.

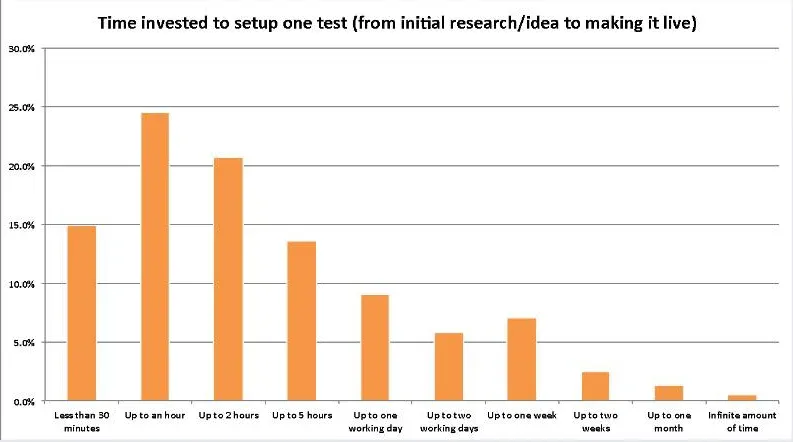

Setting up an AB testing campaign can take days or weeks and involve a wide range of people, from designers to marketing and even legal. Even when you’re ready to start experimenting, VWO says you’ll still have to wait an average of 2-4 weeks to collect enough meaningful data. These challenges are a significant hurdle for website optimisation. So what’s the solution?
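To see why that waiting period is hard to avoid, here is a minimal Python sketch of the standard sample-size calculation for comparing two conversion rates with a two-sided two-proportion z-test. The baseline rate, target lift, significance level, power and daily traffic are illustrative assumptions, not VWO's figures or any client's data, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variant to detect `relative_lift` over `baseline`
    with a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline                        # control conversion rate
    p2 = baseline * (1 + relative_lift)  # rate we hope the variant achieves
    p_bar = (p1 + p2) / 2                # pooled rate under the null hypothesis
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)            # critical value for power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_complete(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days of traffic needed if visitors are split evenly across all variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    # Illustrative assumptions: 3% baseline conversion, hoping for a 10% relative
    # lift, 5,000 eligible visitors per day split across control and one variant.
    n = sample_size_per_variant(baseline=0.03, relative_lift=0.10)
    print(f"{n} visitors per variant, about {days_to_complete(n, 5_000)} days")
```

Because the required sample grows roughly with the inverse square of the difference you're trying to detect, halving the target lift roughly quadruples the sample size. That is one reason tests of small, inconsequential changes eat up so much time.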

Test solutions, not assumptions

I’ve worked in the digital analytics world for more than a decade and have first-hand experience of how prioritised AB tests can lift conversion rates. I’ve learned that guesswork increases the time it takes to achieve statistically significant results. I think organisations end up testing the wrong things because they start with a vague hypothesis rather than with their users' actual experience.

Hypotheses are guesswork. Solution-focused testing, on the other hand, improves conversion rates because it prioritises the experience of real users. Deloitte found that high-quality experiences can lower the cost of serving customers by up to 33%, and customer-centric companies are 60% more profitable (Forbes). If we agree that improving experience gets results, then understanding how experience affects conversion rates is the logical place to start. Once we have data-backed insights, we should quickly validate the solutions we expect to deliver the most revenue.

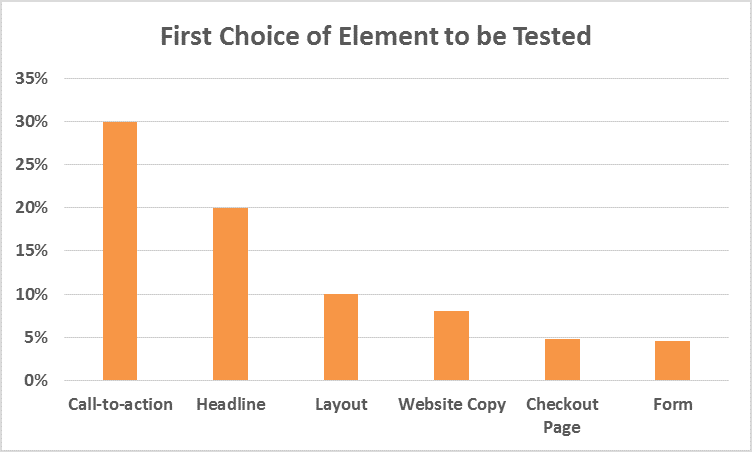

Without understanding how user experience affects conversion rates, we risk wasting time and money testing variations of inconsequential changes. For example, if conversion rates shift, it’s unlikely to be because a call-to-action button changed from blue to green. Experiments like these only waste time, yet a “test everything” methodology will include them.

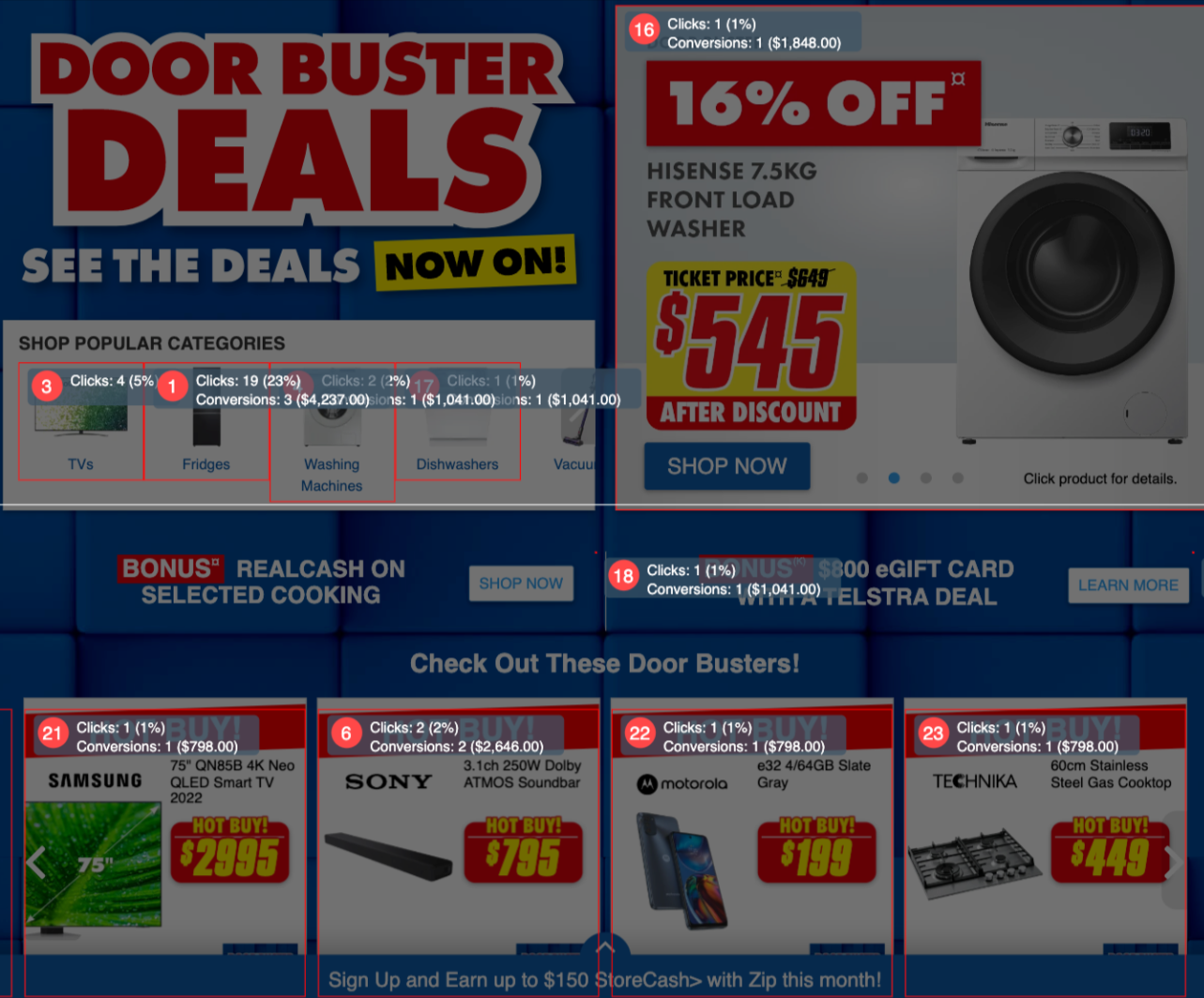

Benefits of prioritising your AB testing with easy-to-understand experience data

Recently, a client asked me to help them figure out why bounce rates on a conversion form were higher than usual. They had spent months AB testing the form design, including adjusting the total number of steps and consolidating all the questions into one long single-page form.

Nothing seemed to work, which was no doubt frustrating. After we deployed a click map on the form and created an audience segment containing only the users who bounced, it quickly became obvious what needed to be done.

Over 90% of bounced users had navigated away from the form to manage their user profiles. The solution the client then AB tested was a new navigation bar design that minimised this friction, and the result was an overall improvement in that form's bounce rate. Scenarios like these pop up on websites all the time. Just when you think you’ve perfectly optimised your funnel by delivering a superior experience, a new challenge appears that needs a solution.
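If you want to reproduce this kind of segment analysis on exported session data, here is a minimal Python sketch: filter to the bounced audience, then count where those users went. The `Session` record, its fields and the sample sessions are hypothetical, invented for illustration; they are not Insightech's data model or the client's data.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    """Hypothetical, simplified session record exported from an analytics tool."""
    session_id: str
    bounced: bool             # left the form without submitting it
    exit_page: Optional[str]  # page navigated to when leaving; None if the tab was closed


def exit_destinations(sessions: list[Session]) -> dict[str, float]:
    """Share of bounced sessions by where the user went, most common first."""
    bounced = [s for s in sessions if s.bounced]  # the 'bounced users' audience segment
    if not bounced:
        return {}
    counts = Counter(s.exit_page or "(closed tab)" for s in bounced)
    return {page: count / len(bounced) for page, count in counts.most_common()}


sessions = [
    Session("a1", True, "/profile"),
    Session("a2", True, "/profile"),
    Session("a3", True, None),
    Session("a4", False, "/confirmation"),  # completed the form, so excluded
]
print(exit_destinations(sessions))
```

A single destination dominating the bounced segment, as the profile page did for this client, is the kind of signal that points to a specific solution worth testing.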

Often, users are simply blocked by basic errors, such as invalid coupon codes, and get frustrated. Traditional AB testing struggles to identify and resolve these issues quickly. Instead, prioritise your AB testing by testing solutions you know will improve the digital experience of individual users.

Empathising with the experience of users is key

The most effective way to understand why users struggle in your conversion funnel is to walk in their shoes and empathise with their pain. Session replay tools are great for this because they let you see user friction as users get stuck at key moments in their buying journey.

A key challenge, though, has always been volume: with 1M+ unique page views per month and 100,000+ replays, it’s hard to find the relevant recordings quickly. It’s also important (but difficult) to know whether the friction you see in a recording is experienced by all users, some users or just that individual user. Solving these problems was one of the reasons I founded Insightech: to help other companies quickly identify where users are dropping off or experiencing difficulties. By watching the user experience, we gain invaluable insight (from your own website data) into the root cause of each friction point and can formulate a solution to validate quickly with AB testing.
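One way to tell whether friction seen in a replay affects all, some or just one user is to count how many sessions hit the same issue and put a confidence interval around that rate. The Python sketch below uses a Wilson score interval, a generic statistical technique rather than how Insightech computes it; the function name and the example counts are hypothetical.

```python
import math
from statistics import NormalDist


def friction_rate(affected: int, total: int,
                  confidence: float = 0.95) -> tuple[float, float, float]:
    """Share of sessions that hit a friction point, with a Wilson score interval.

    Returns (point_estimate, lower_bound, upper_bound)."""
    if total <= 0 or not 0 <= affected <= total:
        raise ValueError("need total > 0 and 0 <= affected <= total")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # e.g. ~1.96 for 95%
    p = affected / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2))
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return p, max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical example: 120 of 2,000 checkout sessions hit an invalid-coupon error.
rate, low, high = friction_rate(affected=120, total=2_000)
print(f"{rate:.1%} of sessions affected (95% CI {low:.1%} to {high:.1%})")
```

In this hypothetical example the point estimate is 6% and the interval runs from roughly 5% to 7%, which suggests a problem for a meaningful minority of users rather than one unlucky visitor. Unlike the simple normal approximation, the Wilson interval stays within 0 to 1 even when very few sessions are affected.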

I’m also a big fan of democratising insights and making it easier for designers, developers and marketing teams to work from the same data. Talk to professionals working in digital and most will agree that siloed data is holding their organisation back. They say a picture is worth a thousand words; a session replay is worth even more. This has been one of the underlying principles we live and breathe at Insightech. It gives me great pleasure to see customers such as Westpac and IAG use our session replay, click maps, form analysis and funnel analysis tools to quickly test solutions and fix the issues they discovered with Insightech.

What we’ve learned over the years is that conversion rates improve when companies know what their users need to see or interact with to convert through their particular funnel.

Another reason is that they can quickly identify and fix operational website errors, such as broken links or incorrect coupon codes. One of our customers, Travello, unlocked an additional $750,000 revenue opportunity by using Insightech to quickly identify the root cause of a payments page issue on their website.

It’s these quick but powerful wins that I’m most proud of and would love to see more companies benefit from.

To learn more I recommend following me on LinkedIn where I'll be sharing regular tips and tricks to optimise your website for maximum conversions this year.

If you'd like to see how Insightech works, one of my team would be happy to show you what we've built on a quick call.

Schedule time directly into our calendar for a demo.

Until next time!